RStudio, new open-source IDE for R

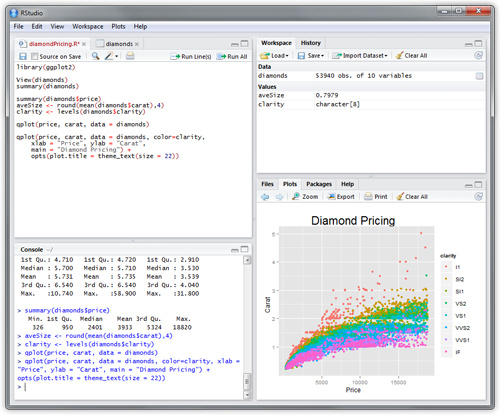

We’re excited to announce the availability of RStudio, a new open-source IDE for R. RStudio offers features for both new and experienced R developers, including code completion, the ability to execute code directly from source files, searchable command history, and support for authoring Sweave documents.

RStudio runs on all major desktop platforms (Windows, Mac OS X, Ubuntu, and Fedora). It can also run as a server, which lets multiple users access the IDE through a web browser.

A couple of screenshots:

The version of RStudio available today is a beta (v0.92), released under the GNU AGPL. We’re hoping for lots of input from, and dialogue with, the R community to help make the product as good as it can be!

More details on the project as well as download links can be found at: http://www.rstudio.org.

Michael Kane on Bigmemory

Handling big data sets has always been a concern for R users. Once a data set exceeds 50% of RAM, it is considered “massive” and can become practically impossible to work with on a standard machine. The bigmemory project, by Michael Kane and Jay Emerson, is one approach to dealing with this class of data set. Last Monday, December 13th, the New England R Users Group warmly welcomed Michael Kane to talk about bigmemory and R.

Bigmemory is one of five packages in the bigmemory project, all aimed at extending R to better handle large data sets. The core data structures are written in C++. They let R users create matrix-like data objects called big.matrix. These objects are designed to behave much like standard R matrices, so they can be used in many of the places a standard matrix can. The backing store for a big.matrix can be a memory-mapped file, which allows it to grow much larger than the available RAM.
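The post itself contains no code, so here is a small Python analogue of a memory-mapped backing store to make the idea concrete. It is not bigmemory’s API, which lives in R and C++. The class name `FileBackedMatrix` and its methods are hypothetical.

The class keeps a matrix of doubles in column-major order (R’s layout) inside a file, using the standard `mmap` and `struct` modules. The operating system then pages in only the parts being read or written.

```python
import mmap
import os
import struct
import tempfile


class FileBackedMatrix:
    """Hypothetical, minimal analogue of a file-backed big.matrix.

    Stores an nrow x ncol matrix of float64 values in column-major order
    (the layout R uses) inside a memory-mapped file. The operating system
    pages parts of the file in and out on demand, so the matrix does not
    have to fit in RAM all at once. No bounds checking in this sketch.
    """

    ITEM = struct.calcsize("d")  # bytes per double (8 on common platforms)

    def __init__(self, path, nrow, ncol):
        self.nrow, self.ncol = nrow, ncol
        size = nrow * ncol * self.ITEM
        new = not os.path.exists(path)
        # Create a zero-filled backing file, or reattach to an existing one.
        self._file = open(path, "w+b" if new else "r+b")
        if new:
            self._file.truncate(size)
        self._mm = mmap.mmap(self._file.fileno(), size)

    def _offset(self, i, j):
        # Byte offset of element (i, j), 0-based, column-major.
        return (j * self.nrow + i) * self.ITEM

    def __getitem__(self, ij):
        i, j = ij
        return struct.unpack_from("d", self._mm, self._offset(i, j))[0]

    def __setitem__(self, ij, value):
        i, j = ij
        struct.pack_into("d", self._mm, self._offset(i, j), value)

    def close(self):
        self._mm.flush()  # push pending writes to the backing file
        self._mm.close()
        self._file.close()


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "demo.bin")
    m = FileBackedMatrix(path, 1_000_000, 3)  # 24 MB backing file
    m[999_999, 2] = 42.0
    m.close()
    # Reattach later (e.g. from another process); the value is still there.
    m2 = FileBackedMatrix(path, 1_000_000, 3)
    print(m2[999_999, 2])  # 42.0
    m2.close()
```

Because the data lives in the file, reopening the same path shows the earlier writes. This loosely mirrors how a file-backed big.matrix can persist between R sessions.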

Mike demonstrated bigmemory on the well-known Airline On-Time data, which covers over 20 years and roughly 120 million commercial US airline flights. The data set is about 12 GB. Since read.table() recommends keeping the data to at most 10%-20% of RAM, loading it the usual way would call for roughly 60-120 GB of memory. That makes it nearly impossible to work with on a standard machine. With bigmemory, however, you can read in and analyze the data without problems.
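The memory arithmetic can be written out as a quick Python check. It uses the 12 GB figure and the 10%-20% read.table() guideline from the talk, plus the post’s 50%-of-RAM threshold for “massive” data. The function names and the 8 GB “standard machine” are illustrative assumptions, not figures from the talk.

```python
def ram_needed_gb(data_gb, pct_of_ram):
    """RAM (GB) at which a data set of data_gb makes up pct_of_ram percent of memory."""
    return data_gb * 100 / pct_of_ram


def is_massive(data_gb, ram_gb):
    """The post's rule of thumb: 'massive' once data exceeds 50% of RAM."""
    return data_gb > 0.5 * ram_gb


airline_gb = 12  # approximate size of the Airline On-Time data, per the talk
for pct in (20, 10):
    print(f"{pct}% of RAM -> {ram_needed_gb(airline_gb, pct):.0f} GB needed")
# 20% of RAM -> 60 GB needed
# 10% of RAM -> 120 GB needed

assumed_laptop_ram_gb = 8  # hypothetical "standard machine"; not from the talk
print(is_massive(airline_gb, assumed_laptop_ram_gb))  # True
```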

Mike also showed how bigmemory can be combined with the MapReduce (or split-apply-combine) approach to greatly reduce the time many statistical calculations take. For example, to determine whether older planes suffer greater delays, you need to know how old each of the 13,000 planes is. On a standard single-core system, this calculation is estimated to take nearly 9 hours. Even running in parallel on 4 cores, it can take nearly 2 hours. Using bigmemory and the split-apply-combine approach, however, the computation takes a little over one minute!
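Below is a Python sketch of the split-apply-combine idea applied to plane ages. It assumes, for illustration only, that a plane’s age can be approximated by the first year it appears in the data. The data values and helper names are hypothetical. This is not the code from the talk, which used R. In R the same pattern is often written with split() and lapply().

One plausible source of the speed-up is that the rows are split by tail number in a single pass. The naive version instead rescans every row once per plane.

```python
from collections import defaultdict


def split_rows(keys):
    """Split: map each key (here, a tail number) to the row indices where it occurs."""
    groups = defaultdict(list)
    for row, key in enumerate(keys):
        groups[key].append(row)
    return groups


def plane_birth_years(tail_numbers, years):
    """Apply + combine: earliest year each plane appears, as a rough age proxy.

    One pass splits the rows; each group is then processed independently,
    so the apply step could also be spread across cores.
    """
    groups = split_rows(tail_numbers)
    return {tail: min(years[r] for r in rows) for tail, rows in groups.items()}


def naive_birth_years(tail_numbers, years):
    """For comparison: rescans every row once per plane (planes x rows work)."""
    return {
        tail: min(y for t, y in zip(tail_numbers, years) if t == tail)
        for tail in dict.fromkeys(tail_numbers)
    }


# Toy stand-ins for two columns of the airline data (hypothetical values).
tails = ["N101", "N202", "N101", "N303", "N202"]
years = [1995, 1990, 1988, 2001, 1999]
print(plane_birth_years(tails, years))  # {'N101': 1988, 'N202': 1990, 'N303': 2001}
```

Both functions give the same answer. With roughly 13,000 planes, though, the naive version repeats its full scan thousands of times, while the split version reads each row only once.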

The bigmemory project recently won the 2010 John M. Chambers Statistical Software Award, which was presented to Mike at the 2010 Joint Statistical Meetings in August.

R at Google

Last night, Ni Wang and Max Lin from Google gave a talk to the New York R User Group on how R is used inside Google. About 150 R developers attended the meeting. Ni and Max said that R is used very widely at Google and is an integral part of the analytics work they do.

One interesting application is the Google Flu Trends project, which uses R to estimate current flu activity from Google search queries. Google Trends aggregates user search queries to show how often a particular word or phrase has been searched. Correlation tests are run on this query data to narrow it down to a manageable set of potentially relevant variables. Then, using R, they prepare the data and fit models with optimized weights for each search term. From these models, they can reasonably estimate current flu activity for different regions around the world.
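The talk did not spell out Google’s actual models, so the following is only a generic Python sketch of the two steps described:

1. A correlation filter keeps only the query terms whose frequencies track the flu rate.
2. A least-squares fit assigns a weight to each kept term.

The search terms, weekly counts, threshold, and the no-intercept model are all made up for illustration.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def select_terms(term_series, target, threshold):
    """Correlation filter: keep terms whose weekly counts track the target."""
    return [t for t, s in term_series.items() if abs(pearson(s, target)) >= threshold]


def fit_weights(columns, target):
    """Least-squares weights w for target ~ sum(w[k] * columns[k]), no intercept.

    Solves the normal equations (X^T X) w = X^T y by Gauss-Jordan elimination.
    """
    k = len(columns)
    a = [
        [sum(p * q for p, q in zip(columns[i], columns[j])) for j in range(k)]
        + [sum(p * y for p, y in zip(columns[i], target))]
        for i in range(k)
    ]
    for col in range(k):
        # Partial pivoting: move the row with the largest entry into place.
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [x - f * p for x, p in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


# Made-up weekly query counts and a synthetic flu rate built from two of them.
terms = {
    "flu symptoms": [1, 3, 2, 5, 4, 6, 8, 7],
    "fever": [2, 1, 3, 2, 4, 3, 5, 6],
    "pizza": [5, 5, 4, 6, 5, 4, 6, 5],
}
flu_rate = [2 * a + 0.5 * b for a, b in zip(terms["flu symptoms"], terms["fever"])]

kept = select_terms(terms, flu_rate, threshold=0.6)
weights = fit_weights([terms[t] for t in kept], flu_rate)
print(kept)                            # ['flu symptoms', 'fever']
print([round(w, 6) for w in weights])  # [2.0, 0.5]
```

The unrelated term (“pizza”, correlation of about 0.36) is dropped. The fit then recovers the weights used to build the synthetic target. Real data would be noisy, and a model would normally include an intercept.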

When Google uses R in a production environment, it often works with very large data sets. To handle them, Google integrates R with several internal technologies, including GFS, Bigtable, and Protocol Buffers (via the RProtoBuf package). Ni and Max said their internal system for analyzing large data sets works much like the R snow package.
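Google’s internal system is not public. The snow package’s core pattern, as we understand it, is scatter/gather. Functions such as clusterApply() or parLapply() send pieces of work to a cluster of R worker processes and then collect the results.

Here is a rough Python analogue of that pattern using the standard `concurrent.futures` module. The helper names are hypothetical. Threads keep the sketch self-contained. For CPU-bound work, a `ProcessPoolExecutor` (separate worker processes, closer to snow’s model) would be the usual choice.

```python
from concurrent.futures import ThreadPoolExecutor


def split_chunks(data, n_chunks):
    """Scatter: split data into n_chunks contiguous, nearly equal pieces."""
    size, extra = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def partial_sum_count(chunk):
    """Work each worker does independently on its own piece."""
    return sum(chunk), len(chunk)


def parallel_mean(data, workers=4):
    """Scatter chunks to workers, gather partial results, combine on the master."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_count, split_chunks(data, workers)))
    total = sum(s for s, _ in partials)
    count = sum(n for _, n in partials)
    return total / count


print(split_chunks(list(range(10)), 4))   # [[0, 1, 2], [3, 4, 5], [6, 7], [8, 9]]
print(parallel_mean(list(range(1, 101))))  # 50.5
```

Workers return small partial results (a sum and a count) rather than raw data. This keeps the gather step cheap even when the chunks are large.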

Google also announced an R client for the Google Prediction API, a service that applies Google’s machine learning algorithms to historical data to predict future outcomes. The R client is available here: http://code.google.com/p/google-prediction-api-r-client/

Finally, Google has published an R Style Guide, which may interest those looking for a set of standards for R code: http://google-styleguide.googlecode.com/svn/trunk/google-r-style.html

Online Resources for Learning R

Online classes are an easy and convenient way to learn more about a topic of interest. Not surprisingly, there are a variety of online resources, free and otherwise, for learning R. From online graduate classes to more “learn at your own pace” options, here are some resources I have found useful:

Programming R – Beginner to advanced resources for the R programming language

Statistics.com – Variety of courses covering beginner and advanced topics in R

- Programming in R – Intermediate-level programming

- R graphics – Learn to fully customize plots and graphics

- ggplot2 – In-depth course taught by Dr. Hadley Wickham

University of Washington – Certificate in Computational Finance with R Programming

Carnegie Mellon – Open and Free Courses including teaching Statistics with R

Penn State – Online Learning through the Department of Statistics

New England R Users Group Meeting

I attended and thoroughly enjoyed Tuesday night’s New England R Users Group meeting. We meet monthly in the Boston area to discuss the various ways people use and interact with the R programming language. Not surprisingly, a variety of industries are represented. One member is using R to recognize patterns in tissue samples that indicate tumor formation. Another is exploring how supercomputers can be used with R to enhance data analysis. Others, from the finance world, use tick data (over 80 GB in size) to make inferences about different securities in the stock market. The list goes on. Clearly we have a very diverse group!

One common issue many of our members face is how to deal with big data sets. There are various packages available for this, such as ff and bigmemory. However, most of us know little about how to actually use them. Our next meeting will therefore feature some short presentations giving a general overview of the various approaches to dealing with big data sets. I am looking forward to it and am sure it will be insightful.